Yanwei Yue 岳彦伟

B.S., Tongji University


I'm Yanwei Yue, a senior undergraduate student in the School of Computer Science, Tongji University. I am broadly interested in LLM Agents and efficient AI.

Education

Tongji University

B.S. in Data Science and Big Data, Sep. 2020 - Jul. 2025

Honors & Awards

- National Scholarship, Tongji University 2023-2024

- First Prize in China Undergraduate Mathematical Contest in Modeling 2023

- Second Prize of Global Campus Artificial Intelligence Algorithm Elite Competition 2023

- 7th Place in CCF Sentence Commutation Prediction Competition 2022

- Meritorious Winner of American Mathematical Contest in Modeling 2023

- First Prize of National College Statistical Modeling Competition in Shanghai Area 2023

Research interests

- LLM Agents: Multi-agent Collaboration, Social Scene Simulation

- Efficient AI: Sparsity, Model Lightweighting

- Data Mining: Graph Learning, Graph Sparsification

News

Selected Publications

Cut the Crap: An Economical Communication Pipeline for LLM-based Multi-Agent Systems

Guibin Zhang†, Yanwei Yue†, Zixun Li†, Sukwon Yun, Guancheng Wan, Kun Wang*, Dawei Cheng, Jeffrey Xu Yu, Tianlong Chen († equal contribution, * corresponding author)

International Conference on Learning Representations (ICLR) 2025 Poster

We propose AgentPrune, an economical, simple, and robust multi-agent communication framework that integrates seamlessly into mainstream multi-agent systems and prunes redundant or even malicious communication messages. Technically, AgentPrune is the first to identify and formally define the communication redundancy issue present in current LLM-based multi-agent pipelines; it efficiently performs one-shot pruning on the spatial-temporal message-passing graph, yielding a token-economical and high-performing communication topology.
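The one-shot pruning idea can be illustrated with a minimal Python sketch. It assumes every communication edge already carries an importance score (how AgentPrune actually learns those scores is not shown), and it simply keeps the top fraction of edges in a single pass, so fewer messages, and therefore fewer tokens, are sent. The names `one_shot_prune` and `message_tokens`, the edge encoding, and all numbers are hypothetical.

```python
from typing import Dict, Iterable, Tuple

# Hypothetical edge key: (sender, receiver, kind). "spatial" edges link agents
# within one dialogue round; "temporal" edges carry messages to the next round.
Edge = Tuple[str, str, str]


def one_shot_prune(scores: Dict[Edge, float], keep_ratio: float) -> Dict[Edge, float]:
    """Keep the highest-scoring fraction of edges in a single pass (no iterative rounds)."""
    if not 0.0 <= keep_ratio <= 1.0:
        raise ValueError("keep_ratio must lie in [0, 1]")
    k = round(len(scores) * keep_ratio)  # number of edges (messages) that survive
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    return dict(ranked[:k])


def message_tokens(edges: Iterable[Edge], tokens_per_edge: Dict[Edge, int]) -> int:
    """Total tokens sent over the given edges; pruned edges cost nothing."""
    return sum(tokens_per_edge[e] for e in edges)


# Hypothetical scores and per-message token counts for a three-agent system.
scores = {
    ("A", "B", "spatial"): 0.9,
    ("B", "C", "spatial"): 0.1,
    ("A", "C", "spatial"): 0.6,
    ("C", "A", "temporal"): 0.3,
}
tokens = {
    ("A", "B", "spatial"): 120,
    ("B", "C", "spatial"): 200,
    ("A", "C", "spatial"): 90,
    ("C", "A", "temporal"): 150,
}

kept = one_shot_prune(scores, keep_ratio=0.5)
print(sorted(kept))  # [('A', 'B', 'spatial'), ('A', 'C', 'spatial')]
print(message_tokens(scores, tokens), "->", message_tokens(kept, tokens))  # 560 -> 210
```

Because the pruning happens once rather than over many prune-retrain rounds, its cost is a single sort over the edge scores.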

Fast Track to Winning Tickets: Repowering One-Shot Pruning for Graph Neural Networks

Yanwei Yue†, Guibin Zhang†, Haoran Yang, Dawei Cheng* († equal contribution, * corresponding author)

Association for the Advancement of Artificial Intelligence (AAAI) 2025 Poster

We propose a one-shot pruning fast track that prunes the graph to the target sparsity in a single step and then gradually optimizes the edge mask. Our framework achieves a double win for graph lottery tickets: higher sparsity and faster speed.
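The "prune once, then refine" pattern can be sketched in Python as follows. This is a minimal illustration, not the paper's algorithm: `one_shot_mask` jumps straight to the target sparsity by edge score, and `refine_mask` keeps that sparsity fixed while swapping the weakest kept edges for pruned edges that now score higher. The swap rule, both function names, and the score values are hypothetical assumptions; calling `refine_mask` repeatedly over training epochs is one way to picture gradual mask optimization.

```python
from typing import Dict, Set, Tuple

Edge = Tuple[int, int]  # hypothetical encoding: undirected edge (u, v)


def one_shot_mask(scores: Dict[Edge, float], sparsity: float) -> Set[Edge]:
    """Prune straight to the target sparsity by keeping the top-scoring edges."""
    keep = round(len(scores) * (1.0 - sparsity))  # surviving edge count
    ranked = sorted(scores, key=scores.get, reverse=True)
    return set(ranked[:keep])


def refine_mask(mask: Set[Edge], scores: Dict[Edge, float], swaps: int) -> Set[Edge]:
    """Swap the weakest kept edges for the strongest pruned ones.

    The mask size (and hence sparsity) is unchanged; a pair is swapped only
    if the pruned edge now scores higher than the kept edge.
    """
    refined = set(mask)
    weakest_kept = sorted(mask, key=scores.get)[:swaps]
    strongest_pruned = sorted(set(scores) - mask, key=scores.get, reverse=True)[:swaps]
    for weak, strong in zip(weakest_kept, strongest_pruned):
        if scores[strong] > scores[weak]:
            refined.remove(weak)
            refined.add(strong)
    return refined


# Hypothetical edge scores before training and after a few refinement epochs.
initial = {(0, 1): 0.9, (1, 2): 0.2, (2, 3): 0.7, (0, 3): 0.4, (1, 3): 0.1}
updated = {(0, 1): 0.8, (1, 2): 0.75, (2, 3): 0.3, (0, 3): 0.5, (1, 3): 0.1}

mask = one_shot_mask(initial, sparsity=0.6)    # keeps (0, 1) and (2, 3)
refined = refine_mask(mask, updated, swaps=1)  # (2, 3) is swapped for (1, 2)
print(sorted(mask), sorted(refined))  # [(0, 1), (2, 3)] [(0, 1), (1, 2)]
```

Pairing the weakest kept edges with the strongest pruned ones means that once one pair fails the comparison, every later pair fails too, so the loop never lowers the total score of the mask.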

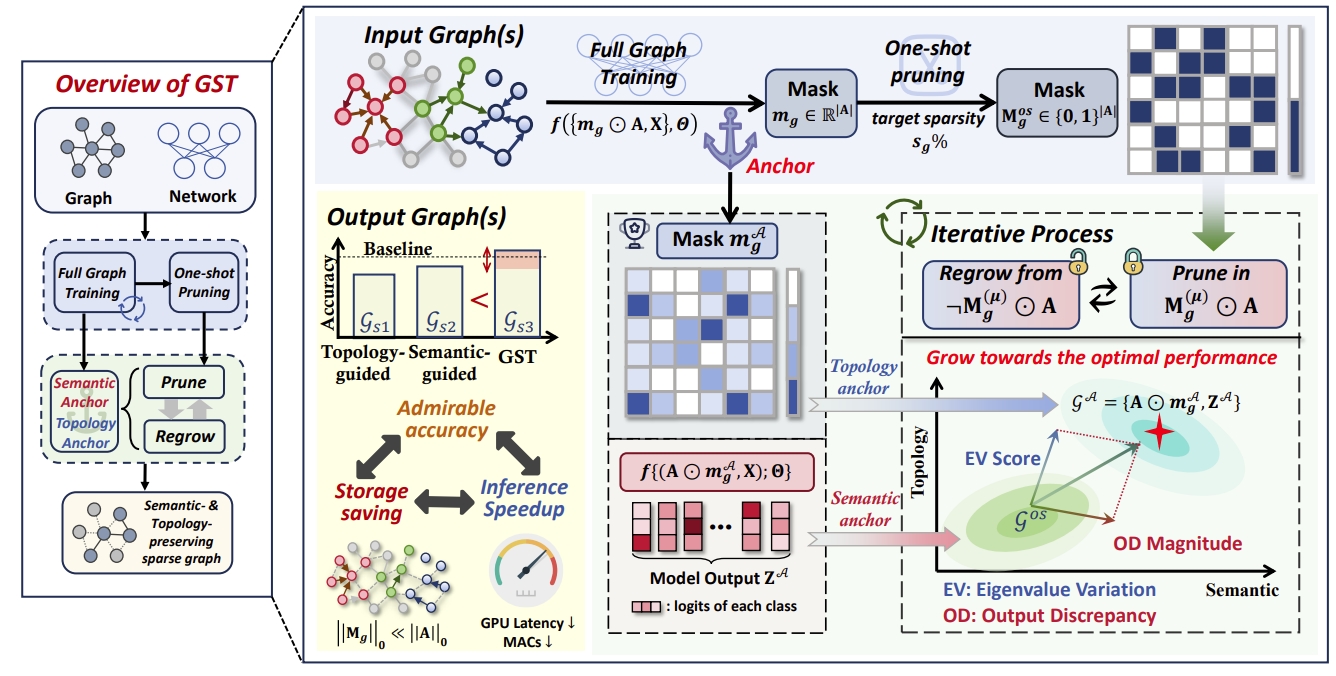

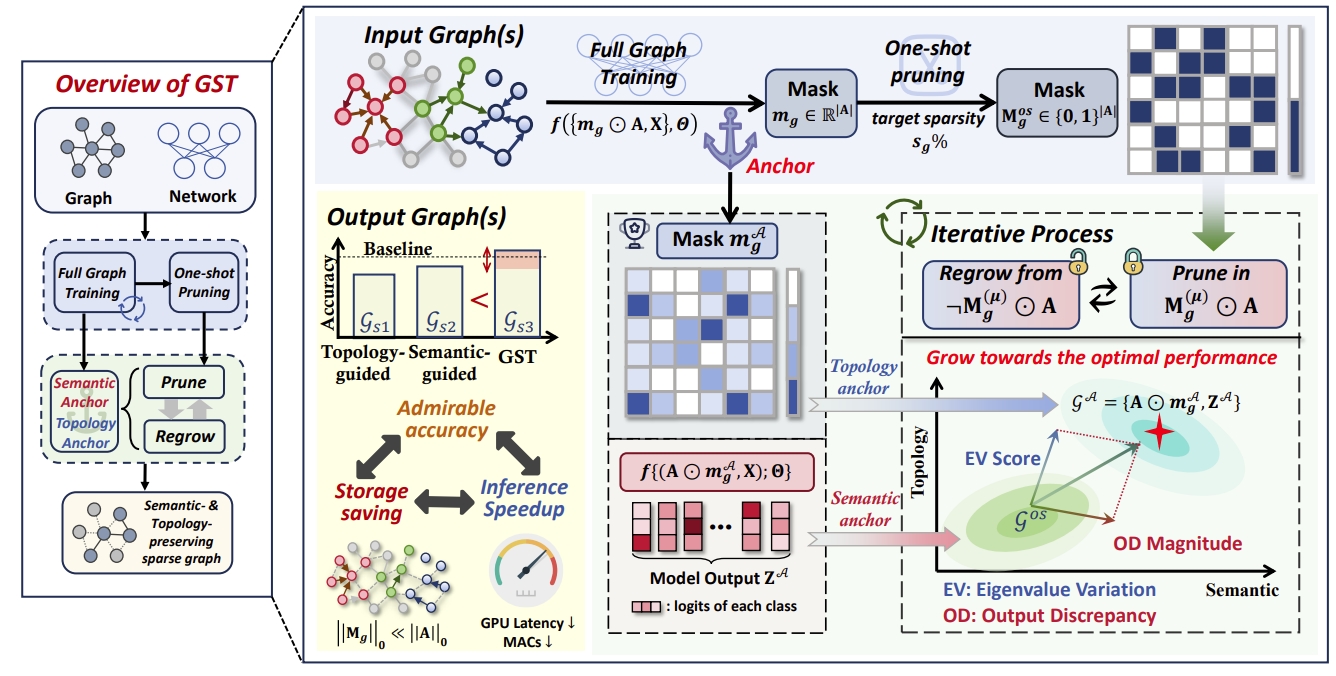

Two Heads Are Better Than One: Boosting Graph Sparse Training via Semantic and Topological Awareness

Guibin Zhang†, Yanwei Yue†, Kun Wang*, Junfeng Fang, Yongduo Sui, Kai Wang, Yuxuan Liang, Dawei Cheng, Shirui Pan, Tianlong Chen* († equal contribution, * corresponding author)

International Conference on Machine Learning (ICML) 2024 Poster

We combine topology-guided pruning with semantic-based pruning. We first conduct limited training on the original graph to build a reliable anchor, and then perform dynamic sparse training that uses the anchor as a semantic and topological benchmark to obtain better sparse graphs.
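The dynamic sparse training step can be pictured as a prune-and-regrow update at fixed sparsity. In this minimal Python sketch, the per-edge `semantic` and `topological` scores stand in for whatever signals the anchor provides; the linear blend with weight `alpha`, the function name `prune_and_regrow`, and all numbers are hypothetical. The drop-lowest/regrow-highest rule is a generic dynamic sparse training pattern, not necessarily the paper's exact criterion.

```python
from typing import Dict, Set, Tuple

Edge = Tuple[int, int]  # hypothetical encoding: undirected edge (u, v)


def prune_and_regrow(
    mask: Set[Edge],
    semantic: Dict[Edge, float],
    topological: Dict[Edge, float],
    alpha: float,
    k: int,
) -> Set[Edge]:
    """One dynamic sparse training update at constant sparsity.

    Each edge gets a blended score alpha * semantic + (1 - alpha) * topological
    (a hypothetical blending rule). The k kept edges with the lowest score are
    dropped and the k currently pruned edges with the highest score are regrown.
    """
    score = {e: alpha * semantic[e] + (1.0 - alpha) * topological[e] for e in semantic}
    kept = sorted(mask, key=score.get)                                   # weakest first
    candidates = sorted(set(score) - mask, key=score.get, reverse=True)  # strongest first
    k = min(k, len(kept), len(candidates))
    return (mask - set(kept[:k])) | set(candidates[:k])


# Hypothetical anchor-derived scores for a four-edge graph.
semantic = {(0, 1): 0.9, (1, 2): 0.1, (2, 3): 0.6, (0, 2): 0.8}
topological = {(0, 1): 0.7, (1, 2): 0.3, (2, 3): 0.2, (0, 2): 0.9}
mask = {(0, 1), (1, 2), (2, 3)}

new_mask = prune_and_regrow(mask, semantic, topological, alpha=0.5, k=1)
print(sorted(new_mask))  # [(0, 1), (0, 2), (2, 3)]
```

Setting `alpha` to 1.0 or 0.0 reduces the update to purely semantic or purely topological pruning, which makes the contribution of each signal easy to compare.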